By Vittorio Compagno for the Carl Kruse Blog

Look at yourself in the mirror. Can you recognize your hairstyle, your nose, your ears? Can you tell if you have put on weight, or if it’s a beautiful day? Congratulations. You and the whole human species have this particular feature called consciousness. It’s so unique that when we catch a glimpse of it in other animals we have to reevaluate how we think about the entire animal world. Yet no animal species has, for example, passed the Turing Test.

Consciousness happens to be one of the greatest indicators of intelligence, a feature so expensive in evolutionary terms that only a few species on Earth have it, and none as much as humans.

“Consciousness is everything you experience. It is the tune stuck in your head, the sweetness of chocolate mousse, the throbbing pain of a toothache, the fierce love for your child, and the bitter knowledge that eventually all feelings will end.” – Scientific American

Consciousness makes you human, a sentient being capable of feeling and thinking. Since the beginning, humans have tried to find similar characteristics in other species. When dogs bark back at us after we say something to them, or when they do what we tell them, we think, “He’s so smart.”

We try to humanize animal behaviors and mental abilities because of our bonds with them. We think that sharks are bad because they are scary, that rabbits are innocent because they are cute, or that cats are presumptuous (though that is actually true). This habit has had an impact on technology as well, since technology is our first “interactive creation” as a species.

Take your smartphone, ask your digital assistant for the time, the weather today, tomorrow, how the stock market is doing… it will answer with a human tone. We like to think the same entities that tell us the time and make phone calls for us are capable of complex thinking, but as soon as we try to have a conversation with our phones, we are left disappointed.

But that won’t always be the case.

The rate at which technology progresses is often described by Moore’s Law. Formulated in 1965 by Gordon Moore, who would later co-found Intel, it stems from his article “Cramming More Components onto Integrated Circuits,” in which he wrote:

“The complexity for minimum component costs has increased at a rate of roughly a factor of two per year. Certainly, over the short term, this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years.”
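To get a feel for what that doubling means, here is a minimal Python sketch of the arithmetic. The function name `growth_factor` and its parameters are made up for illustration and do not come from Moore’s article; the only figures taken from the quote are the factor of two per year and the 10-year horizon.

```python
def growth_factor(years: float, doubling_period_years: float = 1.0) -> float:
    """Return how many times complexity multiplies after `years`,
    assuming it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Moore's 1965 assumption: one doubling per year, held for 10 years.
for years in (1, 5, 10):
    print(f"{years:>2} years -> x{growth_factor(years):,.0f}")
```

Ten yearly doublings multiply complexity by 1,024. Moore himself later revised the pace to a doubling roughly every two years; calling `growth_factor(10, doubling_period_years=2.0)` gives a factor of 32 over the same decade, which is still enormous when it keeps compounding.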

Moore understood where technology was going, and his prediction has held up remarkably well: the processor inside the first iPhone was already a million times faster than the ones that brought humans to the moon, and processors keep improving. The technology in your pocket will be so much more advanced in a few years that picturing the state of the art 30 years from now is almost impossible.

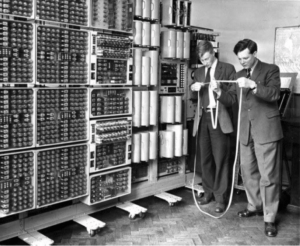

But we don’t have to look that far into the future. Let’s look at today. We have created something that never existed before: an entity able to process thousands of bits per millisecond, a calculation powerhouse that fits in our pockets and is constantly evolving. It’s actually learning from past human experiences, just as a person would.

With this technique, commonly known as machine learning, computers have mastered disciplines that take humans a lifetime to learn. Back in 1997 IBM’s Deep Blue supercomputer, relying mostly on brute-force search, had already defeated world chess champion Garry Kasparov. Then in 2015 Google DeepMind’s AlphaGo, trained on human games and on games played against itself, beat European champion Fan Hui at the Chinese game of Go, considered one of the most complex board games ever created.

The concept of robots threatening human supremacy has been a staple of fiction since the early part of the 20th century, usually as a dystopian future in which machines gain sentience and try to take over the world. Isaac Asimov addressed that fear in 1942 in the short story “Runaround,” later collected in his classic “I, Robot,” where he formulated his famous “Three Laws of Robotics”:

First Law

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Why would a robot harm anyone intentionally? The reason Asimov thought of these “laws” is the same one that makes us give human feelings to dogs, cats, or birds: we humanize the entities around us. And what better object to humanize than one that we created and have the ability to give intelligence to? What would stop progress from giving feelings to a robot? What would stop an engineer from programming a machine to feel pain, and therefore to seek protection from it? The answer to these questions is: nothing.

Progress moves at a pace that’s hard to follow, and one day or another a big company, or a guy in his garage (though much more likely the former), will make a great breakthrough, maybe one of the greatest in human history: imbuing a machine with consciousness.

This will have dramatic implications for future society, perhaps even leading to a technological singularity. And it will raise an even bigger question. If a machine can feel joy, can be bored or angry, can fall in love, or can feel pain, how will it react to, say, being exploited in factories the way humans exploit each other today? If it can feel human feelings, could it not demand privileges reserved for humans?

That raises the final question: How will we deal with AI rights?

============

This Carl Kruse blog home page is at https://carlkruse.at

Contact: CARL at CARLKRUSE dot COM

Another post by Vittorio Compagno focuses on a possible new space race.

The bigger question perhaps is how do we deal with human rights?